Choosing the best CPU for industrial machine learning is the subject of this guide, which covers CPU architecture, CPU-optimized software, scalability, and emerging trends.

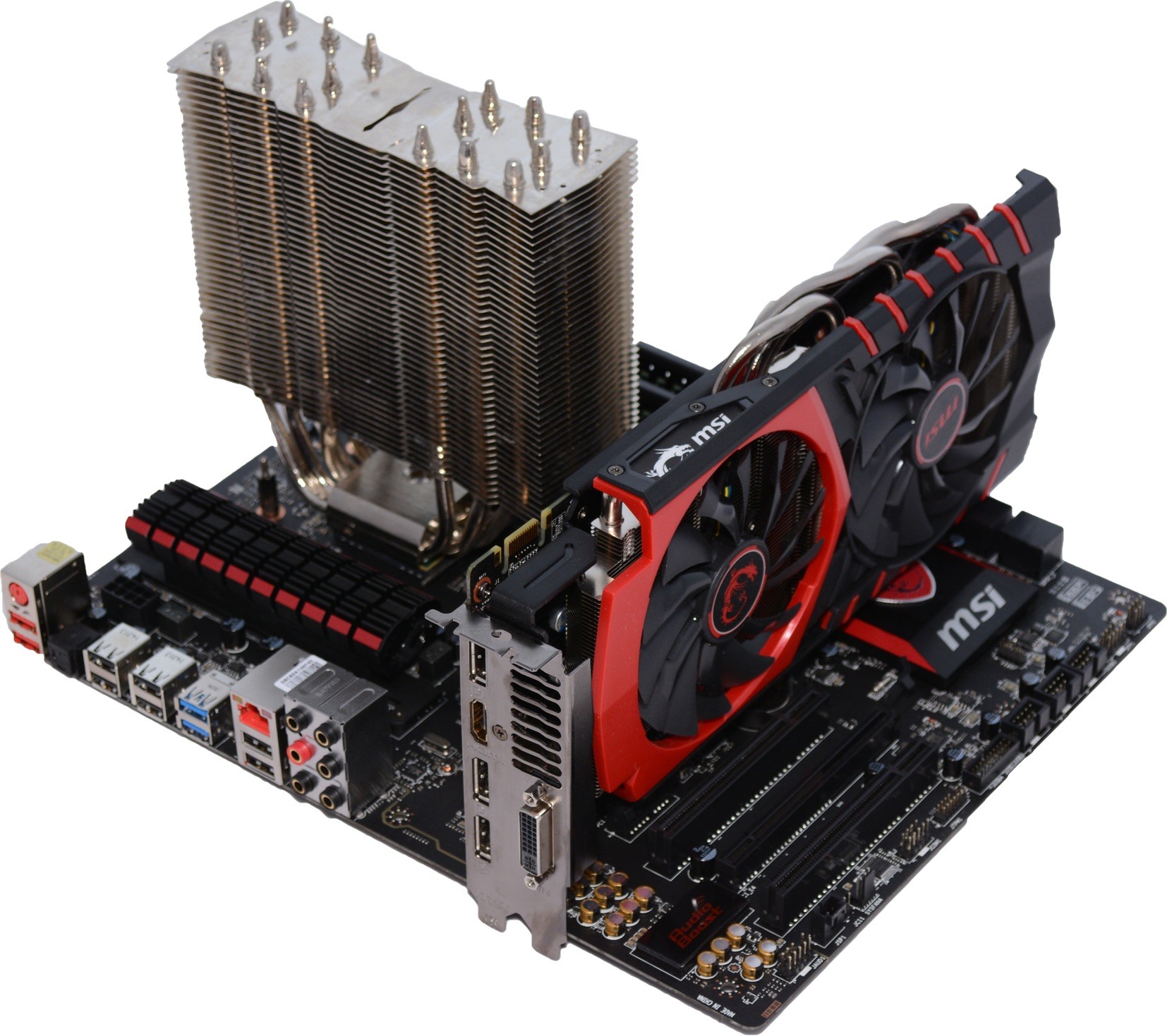

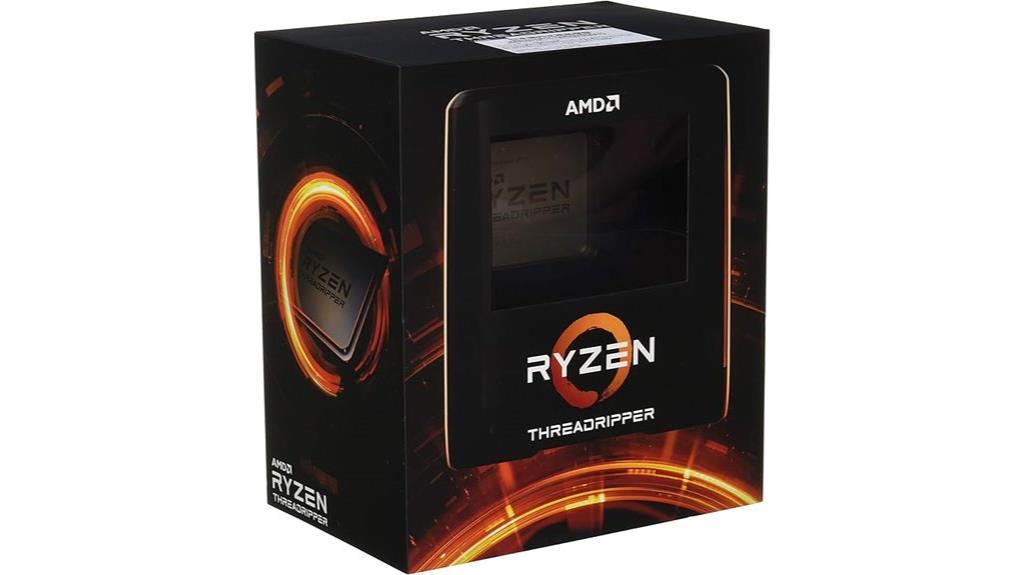

The importance of CPU architecture and core count in industrial machine learning applications can't be overstated. A well-designed CPU can significantly accelerate machine learning performance, reduce energy consumption, and improve scalability. In this article, we will look at the best CPU options for industrial machine learning, highlighting the key features and considerations for each.

Accelerating Industrial Machine Learning with the Right CPU

In industrial machine learning, the right CPU can make the difference between a smooth, efficient workflow and a painfully slow, error-prone one. With a growing number of businesses relying on machine learning for decision-making and automation, the demand for high-performance CPUs has never been higher.

Importance of CPU Architecture and Core Count

When it comes to industrial machine learning, CPU architecture and core count play a crucial role in determining the overall performance of the system. CPU architecture refers to the design and organization of the processor, including the size and type of cache memory, the number of execution units, and the instruction set architecture (ISA). A well-designed architecture can significantly improve the performance of machine learning workloads, which are often characterized by large amounts of data and complex computations.

In industrial machine learning applications, a higher core count can provide better multitasking and improved overall performance. With more cores, the system can handle multiple tasks simultaneously, making it easier to train and deploy machine learning models. However, the number of cores is not the only factor that determines performance; the quality of the cores and the CPU architecture itself also play a significant role.
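The point that core count alone does not determine performance can be made concrete with Amdahl's law. The law is not named in the text above; it is brought in here as a standard model for this effect. The sketch below assumes the parallelizable fraction of a workload is known, and the 90% figure is purely illustrative rather than a measurement.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a workload scales with cores."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative assumption: 90% of a training step parallelizes.
    for cores in (8, 16, 32, 64):
        print(f"{cores:>2} cores -> at most {amdahl_speedup(0.9, cores):.2f}x")
```

Under that assumption the bound can never exceed 10x however many cores are added, which is one way to see why per-core performance still matters.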

Impact of CPU Cache Hierarchy on Machine Learning Performance

The CPU cache hierarchy refers to the organization of cache memory within the CPU. Cache is a small, fast memory that holds frequently accessed data, so the CPU can often avoid the much slower trip to main memory. In industrial machine learning applications, a well-designed cache hierarchy improves performance by reducing the number of main-memory accesses.

The cache hierarchy typically consists of three levels: L1, L2, and L3. The L1 cache is the smallest and fastest, while the L3 cache is the largest and slowest. When data is accessed, the CPU first checks the L1 cache, then the L2 cache, and finally the L3 cache. If the data is not found in any of the caches, the CPU must access main memory, which is considerably slower.

A larger cache can improve performance further by reducing the number of cache misses.
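One common way software takes advantage of the cache hierarchy is loop blocking (tiling), where a large matrix product is computed one small tile at a time so that the data in use can stay in cache. The sketch below shows only the loop structure. The function name and the `block` parameter are illustrative, and pure Python will not show the speedup that a compiled kernel would.

```python
def matmul_blocked(a, b, block=2):
    """Multiply two matrices (lists of rows) one tile at a time."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, block):
        for j0 in range(0, m, block):
            for k0 in range(0, k, block):
                # Work on one tile; in a compiled kernel the tiles would be
                # sized so that they stay resident in cache while reused.
                for i in range(i0, min(i0 + block, n)):
                    for kk in range(k0, min(k0 + block, k)):
                        a_ik = a[i][kk]
                        for j in range(j0, min(j0 + block, m)):
                            c[i][j] += a_ik * b[kk][j]
    return c


if __name__ == "__main__":
    left = [[1, 2, 3], [4, 5, 6]]
    right = [[7, 8], [9, 10], [11, 12]]
    print(matmul_blocked(left, right))
```

The result is the same as an untiled triple loop; only the order in which elements are visited changes.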

Accelerating Matrix Operations with CPU Features

Matrix operations are a fundamental part of machine learning, and many CPUs offer specialized instructions and hardware features to accelerate them. For example, the AVX (Advanced Vector Extensions) instruction set lets a CPU apply a single instruction to several data elements at once (SIMD), which speeds up matrix multiplication and other linear algebra operations.

The AVX-512 instruction set extends AVX by widening the vector registers from 256 to 512 bits, so each instruction can process twice as many elements. Extensions such as AVX-512 VNNI add instructions for the low-precision dot products that are common in inference. These instructions can significantly improve the performance of machine learning workloads that rely heavily on matrix operations.
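Before relying on these instruction sets, it helps to check whether a given machine actually reports them. The sketch below assumes an x86 Linux system, where the kernel lists CPU features on the `flags` line of `/proc/cpuinfo`. The helper name `simd_features` is hypothetical, and other operating systems expose this information differently.

```python
def simd_features(cpuinfo_text: str) -> dict:
    """Report which SIMD flags of interest appear in /proc/cpuinfo-style text."""
    wanted = ("avx", "avx2", "avx512f", "avx512_vnni")
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags.update(value.split())
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as handle:
            print(simd_features(handle.read()))
    except OSError:
        print("No /proc/cpuinfo here; this sketch is Linux-only.")
```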

CPU Manufacturers for Industrial Machine Learning

Both Intel and AMD offer high-performance CPUs that are well suited to industrial machine learning. Intel's Xeon family offers a range of CPUs with many cores and high clock speeds, making them a strong fit for machine learning workloads. AMD's EPYC family also offers high-performance CPUs with many cores and high clock speeds, providing a competitive alternative to Intel's offerings.

When choosing a CPU for industrial machine learning, it's essential to consider the specific needs of your application. Factors to consider include the type of machine learning workload, the size of the dataset, and the level of parallelism required. By choosing the right CPU for your needs, you can get good performance and efficiency from your industrial machine learning workflows.

Comparison of Intel and AMD CPUs for Industrial Machine Learning

Here are a few key differences between one Intel and one AMD server CPU for industrial machine learning:

| CPU | Core Count | Base Clock (GHz) | L3 Cache |
|---|---|---|---|
| Intel Xeon E5-2699 v4 | 22 | 2.2 | 55 MB |
| AMD EPYC 7742 | 64 | 2.25 | 256 MB |

As you can see, the two CPUs have similar base clock speeds, but the EPYC 7742 has a much higher core count and a larger cache, making it more suitable for machine learning workloads that require massive parallelism.

In short, choosing the right CPU for industrial machine learning is essential for performance and efficiency. By considering factors such as CPU architecture, core count, and specialized instructions, you can choose the best CPU for your needs and unlock the full potential of your machine learning workflows.

Enhancing Performance with CPU-Optimized Machine Learning Frameworks and Tools

When it comes to machine learning at industrial scale, CPU performance plays a significant role in the efficiency of tasks like data processing and model training. To get the most out of the CPU, the choice of framework and toolchain is critical. In this section, we will explore some of the most notable frameworks and toolchains optimized for CPU performance, highlighting their capabilities and benefits.

Popular CPU-Optimized Machine Learning Frameworks

TensorFlow and PyTorch are the two most prominent frameworks in the machine learning space, and both support CPU as well as GPU acceleration. Some of the most widely used frameworks that are optimized for CPU performance include:

• TensorFlow, an open-source framework developed by Google, offers a range of APIs and tools that facilitate the creation and deployment of machine learning models on CPU hardware.

• PyTorch, another open-source framework, developed by Facebook (now Meta), is known for its ease of use and provides a range of tools for efficient model training and deployment on CPU hardware.

• MXNet, a highly scalable open-source framework developed under the Apache Software Foundation, supports both CPU and GPU acceleration and offers a modular design that makes it easy to integrate with other frameworks.

• Caffe, a deep learning framework developed by the Berkeley Vision and Learning Center, is optimized for CPU performance and provides a range of tools for training and deploying neural networks.

Toolchains for CPU-Optimized Machine Learning

To further improve CPU performance, various toolchains provide support for multi-threading and parallel processing, which enable efficient execution of CPU-intensive tasks.

Toolchains such as OpenBLAS, MKL, and oneTBB support CPU optimization by providing tuned implementations of linear algebra operations and other core math functions, as well as building blocks for task-level parallelism.

• OpenBLAS, an open-source optimized BLAS (Basic Linear Algebra Subprograms) library, provides highly efficient implementations of linear algebra operations for CPU hardware.

• MKL (Math Kernel Library, now oneMKL), a high-performance math library developed by Intel, offers optimized implementations of linear algebra operations and other core math functions for CPU hardware.

• oneTBB (oneAPI Threading Building Blocks, formerly Intel TBB), an open-source library developed by Intel, provides a range of parallel processing utilities for task-level parallelism on CPU hardware, enabling efficient execution of CPU-intensive tasks.
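In practice, the multi-threading behavior of these math libraries is often controlled through environment variables. The sketch below builds the settings commonly read by OpenMP runtimes, OpenBLAS, and MKL. The helper name `blas_thread_env` is hypothetical, and which variables are actually honored depends on how the library in use was built, so treat it as a starting point rather than a guarantee.

```python
import os


def blas_thread_env(threads: int) -> dict:
    """Environment variables commonly read by OpenMP, OpenBLAS, and MKL."""
    if threads < 1:
        raise ValueError("threads must be at least 1")
    value = str(threads)
    return {
        "OMP_NUM_THREADS": value,
        "OPENBLAS_NUM_THREADS": value,
        "MKL_NUM_THREADS": value,
    }


if __name__ == "__main__":
    # Assumption: one math thread per logical CPU reported by the OS.
    # The variables must be set before the numerical library is first loaded.
    os.environ.update(blas_thread_env(os.cpu_count() or 1))
    print({name: os.environ[name] for name in blas_thread_env(1)})
```

Setting the thread count explicitly is mainly useful when several processes share one machine and would otherwise oversubscribe the cores.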

Role of Data Locality and Memory Hierarchy

Data locality and the memory hierarchy play a crucial role in CPU-based machine learning performance, because they directly affect how quickly data can be accessed and processed.

Data locality refers to how close data is to the processing unit, while the memory hierarchy refers to the organization of memory into different levels with varying access times.

• Caching and buffering mechanisms in modern CPUs can improve data locality, enabling faster access to frequently needed data.

• Using data structures with high spatial locality can also reduce memory access latency and improve overall CPU performance.
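Spatial locality is easiest to see by looking at how far apart consecutive memory accesses are. The sketch below assumes a matrix stored in row-major order in one flat buffer (the layout NumPy uses by default) and compares the access strides of row-wise and column-wise traversal. The function names are illustrative.

```python
def flat_index(row: int, col: int, n_cols: int) -> int:
    """Offset of element (row, col) in a row-major flat buffer."""
    return row * n_cols + col


def traversal_strides(n_rows: int, n_cols: int, order: str) -> list:
    """Distance in elements between consecutive accesses for a traversal order."""
    if order == "row":
        visits = [(r, c) for r in range(n_rows) for c in range(n_cols)]
    elif order == "column":
        visits = [(r, c) for c in range(n_cols) for r in range(n_rows)]
    else:
        raise ValueError("order must be 'row' or 'column'")
    offsets = [flat_index(r, c, n_cols) for r, c in visits]
    return [b - a for a, b in zip(offsets, offsets[1:])]


if __name__ == "__main__":
    print("row-wise:   ", traversal_strides(3, 4, "row"))
    print("column-wise:", traversal_strides(3, 4, "column"))
```

Row-wise traversal touches adjacent elements, so each cache line fetched is fully used. Column-wise traversal jumps a full row at a time, which on large matrices tends to waste most of each cache line.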

Comparison of CPU-Optimized Libraries for Machine Learning

OpenBLAS and MKL are two popular libraries used for CPU optimization in machine learning, where CPU performance is often a critical factor in the efficiency of data processing and model training.

This comparison highlights some of the key differences between OpenBLAS and MKL, including performance, features, and compatibility requirements.

| | OpenBLAS | MKL |
|---|---|---|
| Performance | High-performance optimization for linear algebra operations | Optimized implementations of linear algebra operations and other core math functions |
| Features | Highly customizable and extensible | High-performance optimization for linear algebra operations and other core math functions |
| Compatibility | Cross-platform compatibility with multiple CPU architectures | Optimized for Intel CPU architectures |

Note that the choice of library ultimately depends on specific project requirements, such as CPU architecture, performance demands, and compatibility needs.

Industrial Machine Learning Workflows and Scalability

In the world of industrial machine learning, scalability is key. As organizations strive to harness the power of AI to drive business growth, they often face significant challenges in scaling their machine learning workloads. In this section, we'll look at designing and optimizing CPU-based machine learning workflows for high-performance computing, and explore the role of distributed computing and parallelization in large-scale machine learning tasks.

Challenges of Scaling Machine Learning Workloads

Scaling machine learning workloads in industrial environments is no easy feat. Here are some of the key challenges:

- Data volume and velocity, where the sheer volume and speed of data generated can overwhelm traditional computing systems.

- Model complexity and size, as machine learning models become increasingly sophisticated and larger.

- Computational resource constraints, where the need for high-performance computing resources can lead to bottlenecks and performance issues.

- Economic and resource limitations, where costs and resource constraints can limit the scope and scale of machine learning projects.

These challenges highlight the need for efficient and scalable machine learning workflows that can handle the complexities of industrial environments.

Designing and Optimizing CPU-Based Machine Learning Workflows

To overcome the challenges of scaling machine learning workloads, it's essential to design and optimize CPU-based machine learning workflows for high-performance computing. Here are some strategies to consider:

- Use multi-threading and multi-processing techniques to take advantage of multiple CPU cores.

- Use distributed computing frameworks to scale workloads across multiple machines.

- Use CPU-optimized machine learning libraries and frameworks, such as TensorFlow or PyTorch.

- Implement just-in-time (JIT) compilation and other optimization techniques to minimize overhead and improve performance.

By applying these strategies, organizations can create efficient and scalable machine learning workflows that can handle the demands of industrial environments.
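The first strategy, spreading work across CPU cores, usually follows a split, map, and reduce pattern. The sketch below uses Python's standard `concurrent.futures` with a thread pool. The helper names are illustrative, and the per-chunk function is a stand-in: in CPython, pure-Python code in threads is limited by the global interpreter lock, so real speedups require per-chunk work that releases the lock (for example calls into a native math library) or a process pool instead.

```python
from concurrent.futures import ThreadPoolExecutor
import os


def split_chunks(items, n_chunks):
    """Split a list into at most n_chunks contiguous, near-equal pieces."""
    n_chunks = max(1, min(n_chunks, len(items)))
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """Stand-in for real per-chunk work such as feature extraction."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, workers=None):
    """Map a per-chunk function over a worker pool, then reduce the results."""
    workers = workers or os.cpu_count() or 1
    chunks = split_chunks(items, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1000))))
```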

The Role of Distributed Computing and Parallelization

Distributed computing and parallelization are critical components of scalable machine learning workflows. By breaking down complex tasks into smaller sub-tasks and assigning them to multiple machines or cores, organizations can significantly improve performance and efficiency.

Parallelization is a key concept in distributed computing: multiple tasks are executed simultaneously, which improves performance and scalability.

Here's an example of how distributed computing can be applied in a real-world industrial use case:

Example: Predictive Maintenance in Manufacturing

Suppose a manufacturing company wants to implement a predictive maintenance system using machine learning. The goal is to predict equipment failure and schedule maintenance accordingly. The company collects data from sensors on the equipment and uses a machine learning model to predict failure.

To scale this workflow, the company uses distributed computing to break the task into smaller sub-tasks:

- Data ingestion and preprocessing: Multiple machines are assigned to handle data ingestion and preprocessing, reducing the load on individual machines.

- Model training: The machine learning model is trained using distributed computing, with multiple machines working together to train the model in parallel.

- Prediction and deployment: The trained model is deployed in a distributed environment, where predictions are made simultaneously across multiple machines.

By applying distributed computing and parallelization, the company can significantly improve the performance and scalability of its machine learning workflow, enabling it to make predictions in near real time and reduce downtime.
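The model training step above is often implemented as data-parallel training: each machine computes a gradient on its own shard of the data, the gradients are averaged, and every machine applies the same update. The sketch below simulates this in a single process for a one-variable linear model. The model, the data, and the function names are illustrative assumptions, not the company's actual system, and a real deployment would exchange gradients over a network.

```python
def gradient(w, b, xs, ys):
    """Mean-squared-error gradient for the model y = w * x + b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def data_parallel_step(w, b, shards, lr=0.01):
    """One training step: per-shard gradients, averaged, then applied."""
    grads = [gradient(w, b, xs, ys) for xs, ys in shards]  # one per worker
    dw = sum(g[0] for g in grads) / len(grads)
    db = sum(g[1] for g in grads) / len(grads)
    return w - lr * dw, b - lr * db


if __name__ == "__main__":
    # Hypothetical sensor readings that follow y = 2x + 1, split over two workers.
    shards = [([0.0, 1.0], [1.0, 3.0]), ([2.0, 3.0], [5.0, 7.0])]
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b = data_parallel_step(w, b, shards, lr=0.05)
    print(round(w, 3), round(b, 3))
```

With equally sized shards, the averaged gradient equals the gradient over the full dataset, so the distributed run follows the same path as single-machine training.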

Integration and Interoperability

Integration and interoperability are crucial aspects of industrial machine learning, ensuring that different components, frameworks, and tools can communicate and work together. In a CPU-based machine learning environment, they enable efficient deployment and scaling of models, ultimately leading to improved performance and productivity.

CPU-Optimized Integration Examples

Numerous industrial machine learning environments offer CPU-optimized integration that improves the performance of CPU-based machine learning workflows. Some notable examples include:

- Intel OpenVINO: A comprehensive toolkit for AI, computer vision, and machine learning that provides optimized integration for CPU-based workloads. OpenVINO offers a range of pre-trained models and supports popular frameworks like TensorFlow, PyTorch, and Caffe.

- Google TensorFlow Lite: A lightweight version of TensorFlow designed for mobile and embedded devices, including their CPUs. TensorFlow Lite offers optimized kernels for CPU-based workloads and supports both integer and floating-point data types.

- MXNet: An open-source deep learning framework that supports both CPU and GPU acceleration. MXNet provides a unified API for CPU and GPU workloads, making it an attractive option for CPU-based machine learning workflows.

These frameworks and tools are designed with CPU performance in mind, enabling faster execution of machine learning workloads and, in some cases, reducing the need for expensive GPUs.

Data Format and Model Format Compatibility

The choice of data format and model format has significant implications for CPU-based machine learning workflows. Data formats like NumPy (ndarray) and pandas (DataFrame) are popular choices for CPU-based workloads because of their efficiency and ease of use. Model formats like TensorFlow's SavedModel and PyTorch's pickle-based checkpoint files are also widely used in CPU-based machine learning environments.

NumPy and pandas offer efficient data structures and operations, making them well suited to CPU-based machine learning workloads.

CPU-oriented tools like TensorFlow Lite and OpenVINO also support a variety of data and model formats, which eases integration with existing workflows.

Protocols and Interfaces for CPU-Based Machine Learning

Protocols and interfaces play a critical role in CPU-based machine learning, enabling efficient communication between different components and frameworks. Two libraries that often come up in this context are GPU-oriented, so it helps to be clear about how they relate to CPU workflows:

- cuBLAS (CUDA Basic Linear Algebra Subprograms): NVIDIA's implementation of BLAS for its GPUs. It does not run on CPUs, but because it follows the BLAS model, code written around BLAS routines can move between CPU libraries such as OpenBLAS or MKL and cuBLAS with modest changes.

- NCCL (NVIDIA Collective Communications Library): A high-performance communication library for distributed training across NVIDIA GPUs. It does not target CPUs; CPU-only distributed training typically relies on backends such as MPI or Gloo.

Choosing suitable interfaces and communication backends keeps components and frameworks working together efficiently, ultimately leading to improved performance and productivity in CPU-based machine learning workflows.

Design Considerations for a Scalable and Efficient CPU-Based Machine Learning Ecosystem

When designing a CPU-based machine learning ecosystem, several key considerations come into play. Some important design considerations include:

- Scalability: The ability to scale up or down depending on the workload, ensuring efficient use of resources and minimal overhead.

- Efficiency: Optimizing CPU performance through efficient data formats, model formats, and protocol implementations.

- Avoiding Bottlenecks: Identifying and mitigating potential bottlenecks in the workflow, such as data input/output or memory access.

- Flexibility: Supporting a range of frameworks, tools, and protocols to accommodate diverse workload requirements.

By carefully considering these design factors, developers can create efficient and scalable CPU-based machine learning ecosystems that meet the demands of modern machine learning workloads.

The world of industrial machine learning is evolving rapidly, with advancements in CPU architecture and design as well as the emergence of new technologies and trends. Looking ahead, it's essential to understand the developments that will shape the industry.

Advancements in CPU Architecture and Design

The CPU is the brain of any computing system, and recent advancements in architecture and design have significantly affected industrial machine learning. One of the most significant developments is the rise of the ARM (Advanced RISC Machines) and RISC-V (stewarded by RISC-V International) architectures. These designs offer improved performance, energy efficiency, and scalability, making them attractive for machine learning workloads.

ARM, in particular, has become a popular choice for machine learning because it offers high performance, low power consumption, and a wide range of device options. RISC-V, on the other hand, is an open-source instruction set architecture that has gained significant traction in recent years, especially in edge computing and Internet of Things (IoT) devices.

Key Features of ARM and RISC-V Architectures

* Improved performance and energy efficiency

* Scalability and flexibility

* Wide range of device options

* Open-source nature of the RISC-V architecture

Role of Domain-Specific Hardware Accelerators

Domain-specific hardware accelerators (DSHAs) play a crucial role in industrial machine learning by providing dedicated hardware for specific workloads. DSHAs are designed to accelerate particular tasks or algorithms, such as matrix multiplication, convolutional neural networks (CNNs), or recurrent neural networks (RNNs).

These accelerators can significantly improve the performance and efficiency of machine learning workloads, leading to faster model training, inference, and deployment. Some examples of DSHAs include:

* Graphics Processing Units (GPUs) from NVIDIA and AMD

* Field-Programmable Gate Arrays (FPGAs) from Xilinx and Intel

* Application-Specific Integrated Circuits (ASICs) from various vendors

Benefits of Domain-Specific Hardware Accelerators

* Improved performance and efficiency

* Reduced power consumption

* Increased scalability and flexibility

* Dedicated hardware for specific workloads

Emerging Trends in Machine Learning Software

The world of machine learning software is constantly evolving, with new trends and technologies emerging every year. Some of the most promising trends include:

* Transfer Learning: Transfer learning is a technique that allows machine learning models to leverage knowledge from one domain and adapt it to another. This approach has shown significant improvements in model performance and training efficiency.

* Model Distillation: Model distillation is a technique that involves training a smaller, more efficient model to mimic the behavior of a larger, more complex model. This approach can significantly reduce model size and improve deployment efficiency.

* Quantization: Quantization is a technique that involves reducing the precision of model weights and activations to improve efficiency and reduce memory usage. This approach can substantially improve inference speed and deployment efficiency, usually at a small cost in accuracy.
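Of the three techniques, quantization is the simplest to show in a few lines. The sketch below applies symmetric per-tensor quantization to 8-bit integers, one common scheme among several. The function names are illustrative, and production frameworks add calibration and per-channel scales that are omitted here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(quantized, scale, worst)
```

Each weight is stored in one byte instead of four, and the rounding error per weight is at most half of `scale`.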

Examples and Real-World Applications

* Transfer learning has been applied in areas such as image classification, natural language processing, and recommendation systems.

* Model distillation has been applied in areas such as speech recognition, image classification, and recommendation systems.

* Quantization has been applied in areas such as image classification, natural language processing, and recommendation systems.

Comparison of CPU Architectures and Emerging Machine Learning Trends

The following table compares CPU architectures by their relevance to emerging machine learning trends:

| CPU Architecture | Model Distillation | Transfer Learning | Quantization |
|---|---|---|---|
| ARM | High | High | High |
| RISC-V | Medium | Medium | Medium |
| x86 | Low | Low | Low |

Note: The comparison is based on the current state of the art and may change with future developments.

Final Review

Choosing the best CPU for industrial machine learning requires a careful evaluation of several factors, including CPU architecture, core count, and energy efficiency. By weighing these factors and selecting the right CPU for your specific use case, you can unlock the full potential of your machine learning applications.

Key Questions Answered

What is the most important factor to consider when selecting a CPU for industrial machine learning?

The most important factor to consider is the CPU architecture and its ability to support parallel processing and matrix operations.

Can AMD CPUs deliver comparable performance to Intel CPUs for machine learning workloads?

Yes, AMD CPUs have made significant strides in recent years and can deliver comparable performance to Intel CPUs for certain machine learning workloads.

How do CPU manufacturers optimize their CPUs for machine learning workloads?

CPU manufacturers use a variety of techniques, including specialized cores, cache hierarchy optimization, and matrix operation acceleration.